Stop Emphasizing the Data Catalog

In the modern data stack, managing data assets effectively is one of the most important things you can do for your organization. When I talk to many people in the industry, startups, data teams, and experts, they keep telling me how important it is to build a data catalog, track data lineage, and understand the data mesh. Bottom line, I think those will all be commoditized soon (if not already) as more and more modern solutions are building all of those capabilities integrated as part of a much larger offering.

Very often, the term Data Catalog comes up, and every time people describe it as a static solution to understand their data. I rarely hear how this solves a business problem and what’s the approach to dynamically and automatically keep this information up to date to be able and efficiently consistently serve the business owner.

So let’s examine what the options are.

The data catalog approach

A classic data catalog is a tool that provides a centralized metadata repository that describes an organization's data assets. It should enable easy discovery, understanding, and use of these assets. One of the most significant benefits of a data catalog concept is that it provides a unified view of all the data assets within an organization, including databases, tables, columns, files, and data models. The metadata in a data catalog includes information such as the data source, data type, data quality, ownership, and access controls. This can help organizations manage their data assets effectively and enforce data governance policies by providing a single source of truth. For example, a healthcare organization can use a data catalog to ensure that patient data is accessed only by authorized personnel and that the data is of high quality and accuracy.

However, while data catalogs have many benefits, there may be better solutions for modern organizations, and they come with many downsides.

Let’s face it, data catalogs can become quickly outdated and may not reflect the current state of the data assets within an organization. This can lead to confusion and errors, as users may be relying on outdated information. In addition, data catalogs can be complex to set up and maintain, requiring significant time and resources from the data engineers.

Another argument against data catalogs is that they can stifle innovation and creativity by dictating how data should be organized and categorized. This can limit the ability of users to find new insights and relationships in the data. Moreover, data catalogs can restrict the flexibility of users to query and analyze data in new and innovative ways.

A data catalog typically uses automated processes to discover, profile, and tag data assets. Once the metadata is collected, the data catalog provides a searchable interface that enables users to find relevant data assets based on keywords, tags, or other criteria. The data catalog can also provide information on accessing and using the data, including data preparation steps and integration guidelines.

Creating and maintaining a classic data catalog can be time-consuming, static, and expensive. It requires significant effort and resources to collect and curate metadata and to ensure that it is up-to-date and accurate. In addition, data catalogs can be difficult to implement, primarily in organizations with significant and complex data environments. Sometimes I see organizations that are less sophisticated maintain their catalogs in unmanaged ways, such as using spreadsheets or a shared document that is neither maintained nor easily understandable by business users, which is, frankly, a wrong solution that gives the false impression and which also makes it extremely difficult for business users to understand.

So what’s the alternative?

Use the modern dynamic data semantic layer instead of catalogs, relationships, and lineages.

When using a data semantic layer and real-time information, static data catalogs may not be as useful. A data semantic layer provides a single source of truth for the entire organization, including real-time information, and allows for greater flexibility in querying and analyzing data. This eliminates the need for a static data catalog to dictate how data is organized and categorized. Moreover, a data semantic layer can provide automated data discovery and integration, eliminating the need for a static data catalog to manually discover and catalog new data sources.

In new semantic layers, key business metrics are defined non-repetitively by the business and data teams as code (logic). The goal is to abstract metrics so business people can discuss with data engineers and transfer ownership closer to the domain experts. The metrics are higher-level abstractions that are stored in a central repository in a declarative manner.

LookML from Looker is one of the most famous semantic layers abstracts the model definition from the visualization layer. However, it forces you to implement it together with the specific looker visualization layer.

The most commonly named tools are Cube, MetricFlow, MetriQL, dbt Metrics, or Malloy.

In the example of dbt, it focuses on empowering data teams to automate their data pipeline and provide a single source of truth for their data.

dbt places a strong emphasis on metrics as a top priority. This focus on metrics as a first-class citizen is driven by the belief in accessibility. By naming metrics in a clear and concise manner with a clear business meaning, and by prioritizing metrics at the top of the configuration, dbt is shaping the API and access patterns for the semantic layer. The choice of the convention will have a significant impact on what is presented to consumers of the semantic layer.

There are other dynamic solutions out there, and many legacy vendors are trying to move in that direction for a good reason. The semantic layer is a powerful solution that addresses a range of issues in data management and analysis. It streamlines messy and error-prone processes, such as spreadsheets and other models, by establishing a better method to define and use business concepts with a single source of truth. This helps to avoid duplication of work across different BI/Analytics tools, experimentation tools, ML Models, and more.

In addition, the semantic layer empowers stakeholders by allowing them to self-service more quickly and handle simple requests themselves, reducing the reliance on analysts as data gatekeepers. It also enables deeper self-service by creating a system that can handle most ad hoc requests.

By transforming business data into recognizable terms, the semantic layer provides a consolidated and unified view of data across the organization, establishing a standard approach to consuming and driving enterprise-wide analytics. The benefits of a semantic layer include: empowering data analytics and machine learning for all users, establishing a single source of truth, streamlining model development and sharing, enhancing query performance and reducing computing costs, minimizing data cleaning effort, and improving security and governance.

Data discovery provides a comprehensive and dynamic understanding of your data based on how it is being ingested, stored, aggregated, and utilized by specific consumers. Unlike a data catalog, data discovery presents a real-time understanding of the data's current state, rather than its ideal or "cataloged" state.

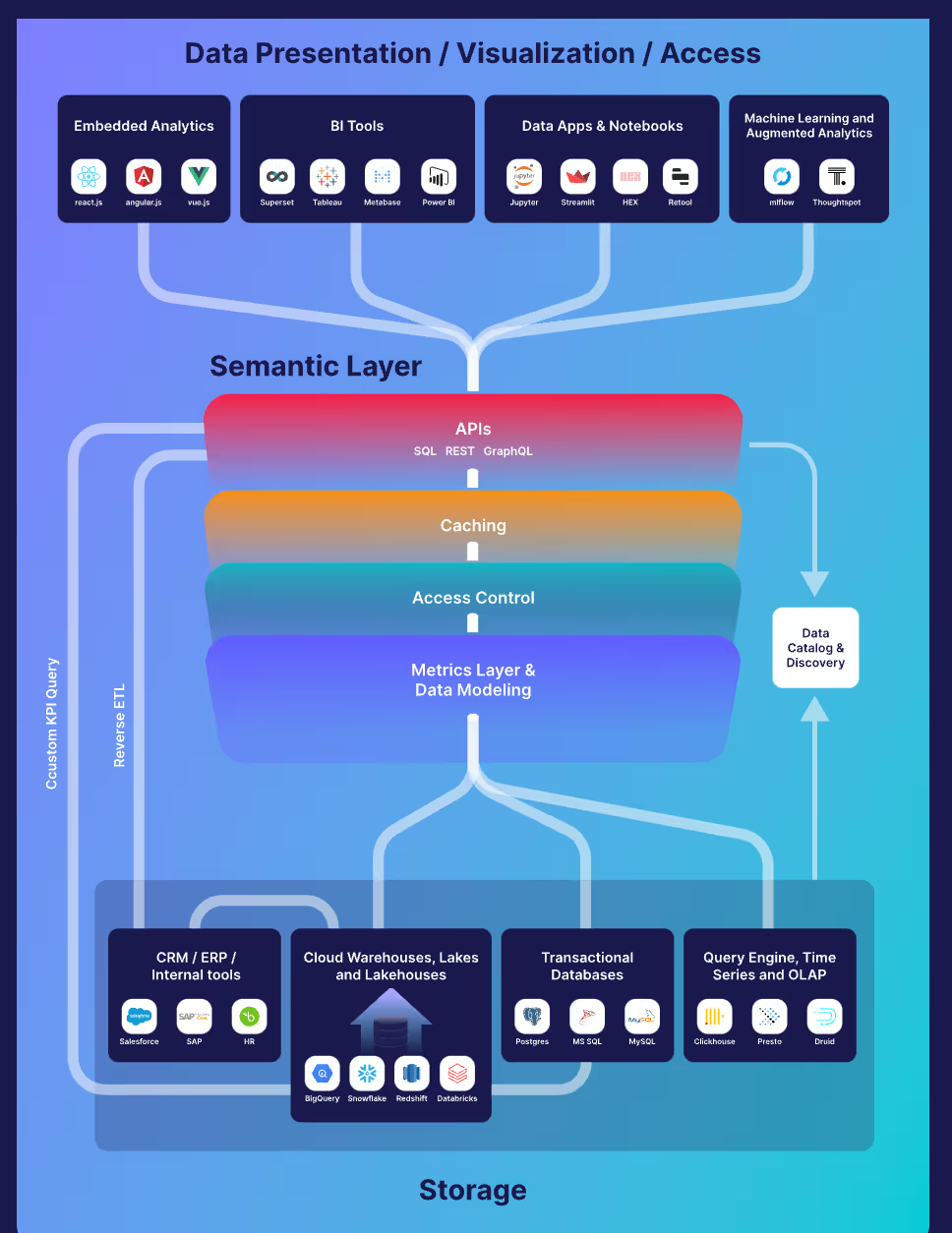

I recently came across Simon Späti excellent and extensive post about The Rise of the Semantic Layer: Metrics On-The-Fly. I really think he illustrates how a semantic layer plays such an essential role in the modern data stack - all credit to him.

Data discovery also leverages governance standards and centralized tooling across various domains, promoting accessibility and interoperability. This approach is particularly useful when teams adopt a decentralized approach to governance, holding different data owners accountable for their data as products. This allows data-savvy users throughout the business to self-serve from these products.

My friend Barr Moses summarised it really well in one of her posts:

Data catalogs as we know them are unable to keep pace with this new reality for three primary reasons: (1) lack of automation, (2) inability to scale with the growth and diversity of your data stack, and (3) their undistributed format.

I couldn't agree more!

In conclusion, while a data catalog concept is essential for any organization that wants to manage its data assets effectively, there may be better solutions for organizations in today's modern data stack. A more modern solution is a dynamic semantic layer, which provides a single source of truth for the entire organization, including real-time information, and allows for greater cross-collaboration.

Guy Fighel is SVP & GM, Data Platform Engineering & AI at New Relic.

.jpg)